How to build TungstenFabric

These are notes from trying to build TungstenFabric.

The procedure is based on the instructions in the following repository.

https://github.com/Juniper/contrail-dev-env

The source tag used for the build is r5.0.1.

The build environment is an EC2 instance on AWS (CentOS 7.5, ami-3185744e, c5.xlarge, 30 GB disk).

    # yum install -y git docker
    # git clone -b R5.0 https://github.com/Juniper/contrail-dev-env
    # cd contrail-dev-env
    # ./startup.sh -t r5.0.1       <- about 5 minutes
    # docker attach contrail-developer-sandbox
    # cd /root/contrail-dev-env
    # make sync                    <- about 2 minutes
    # make fetch_packages          <- about 1 minute
    # make setup                   <- about 2 minutes
    # make dep                     <- about 1 minute
    # make rpm                     <- about 60 minutes
    # make containers              <- about 30 minutes
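Because the make targets have to run in a fixed order and the later ones take a long time, it can help to drive them from a small script that stops at the first failure. The following is only a sketch: the step list and the working directory are copied from the commands above, while `run_steps` and its `runner` parameter are hypothetical names introduced here for illustration.

```python
import subprocess
from typing import Callable, List

# The make targets from the notes above, in the order they were run.
BUILD_STEPS: List[List[str]] = [
    ["make", "sync"],
    ["make", "fetch_packages"],
    ["make", "setup"],
    ["make", "dep"],
    ["make", "rpm"],
    ["make", "containers"],
]


def run_steps(
    steps: List[List[str]],
    runner: Callable[..., int] = subprocess.call,
    cwd: str = "/root/contrail-dev-env",
) -> List[List[str]]:
    """Run each step in order and stop at the first non-zero exit code.

    Returns the steps that completed successfully. `runner` is injectable
    (hypothetical design choice) so the ordering logic can be tried
    without actually invoking make.
    """
    done: List[List[str]] = []
    for step in steps:
        if runner(step, cwd=cwd) != 0:
            break
        done.append(step)
    return done


if __name__ == "__main__":
    finished = run_steps(BUILD_STEPS)
    print("completed:", [" ".join(s) for s in finished])
```

Running it inside the developer sandbox container would execute the same sequence as the manual commands; a failed `make rpm`, for example, would prevent `make containers` from starting.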

After the build, I confirmed that the RPMs and the docker images had been created, as shown below.

    ## After make rpm
    [root@cee5c820ba1b RPMS]# pwd
    /root/contrail/RPMS
    [root@cee5c820ba1b RPMS]# ls
    noarch repodata x86_64
    [root@cee5c820ba1b RPMS]# find .
    .
    ./noarch
    ./noarch/contrail-vcenter-manager-5.0.1-122620181030.el7.noarch.rpm
    ./noarch/contrail-tripleo-puppet-5.0.1-122620181030.el7.noarch.rpm
    ./noarch/contrail-fabric-utils-5.0.1-122620181030.noarch.rpm
    ./noarch/contrail-heat-5.0.1-122620181030.el7.noarch.rpm
    ./noarch/ironic-notification-manager-5.0.1-122620181030.el7.noarch.rpm
    ./noarch/neutron-plugin-contrail-5.0.1-122620181030.el7.noarch.rpm
    ./noarch/contrail-setup-5.0.1-122620181030.el7.noarch.rpm
    ./noarch/contrail-config-5.0.1-122620181030.el7.noarch.rpm
    ./noarch/contrail-manifest-5.0.1-122620181030.el7.noarch.rpm
    ./x86_64
    ./x86_64/contrail-web-core-5.0.1-122620181030.x86_64.rpm
    ./x86_64/contrail-vrouter-init-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-vrouter-dpdk-init-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-web-controller-5.0.1-122620181030.x86_64.rpm
    ./x86_64/contrail-nodemgr-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-nodemgr-debuginfo-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-vrouter-dpdk-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-vrouter-dpdk-debuginfo-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-vcenter-plugin-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/libcontrail-java-api-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/libcontrail-vrouter-java-api-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/libcontrail-vijava-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-debuginfo-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-vrouter-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-vrouter-source-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-config-openstack-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/python-contrail-vrouter-api-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/python-contrail-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-vrouter-utils-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-vrouter-agent-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-control-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/python-opencontrail-vrouter-netns-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-lib-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-analytics-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-dns-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-nova-vif-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-utils-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-docs-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-fabric-utils-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-test-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-kube-manager-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-mesos-manager-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-kube-cni-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-cni-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-k8s-cni-5.0.1-122620181030.el7.x86_64.rpm
    ./x86_64/contrail-mesos-cni-5.0.1-122620181030.el7.x86_64.rpm
    ./repodata
    ./repodata/ab700c4d976d0c2186618a8bc2219e33c3407177d45efd7809a73038e1468310-other.sqlite.bz2
    ./repodata/8eae700f5b480efd71c24e154449a693a73ee03b9f30ed5d9a7c9da00a9d5920-other.xml.gz
    ./repodata/6dcee29e0689f1e96e40b1b65375e4225b47a463a9729a6688fba4ef5d28307b-filelists.sqlite.bz2
    ./repodata/ac47c8a245b62760210729474c6e2cbbf158a6224bcb1209b41f9b0a106bf134-filelists.xml.gz
    ./repodata/6e347f2302a7edc66f827185b10bbfea442823ea218f7e275b8484dc29459af5-primary.sqlite.bz2
    ./repodata/e988c233d42d947e19b19269c0f34b8e11359b89bdfe794d59547139bb0ca832-primary.xml.gz
    ./repodata/repomd.xml
    [root@cee5c820ba1b RPMS]#

    ## After make containers
    [root@cee5c820ba1b contrail]# docker images
    REPOSITORY   TAG   IMAGE ID   CREATED   SIZE
    172.17.0.1:6666/contrail-vrouter-plugin-mellanox-init-ubuntu   queens-dev   cdca613081c8   50 seconds ago   539 MB
    172.17.0.1:6666/contrail-vrouter-plugin-mellanox-init-redhat   queens-dev   bede1afc91dd   2 minutes ago   707 MB
    172.17.0.1:6666/contrail-vrouter-kernel-init-dpdk   queens-dev   f65a1403e650   2 minutes ago   1.05 GB
    172.17.0.1:6666/contrail-vrouter-kernel-init   queens-dev   54952787a678   3 minutes ago   885 MB
    172.17.0.1:6666/contrail-vrouter-kernel-build-init   queens-dev   d551cee2d300   4 minutes ago   259 MB
    172.17.0.1:6666/contrail-vrouter-agent-dpdk   queens-dev   6c9e37cdb493   5 minutes ago   1.07 GB
    172.17.0.1:6666/contrail-vrouter-agent   queens-dev   8f00e230415e   6 minutes ago   1.08 GB
    172.17.0.1:6666/contrail-vrouter-base   queens-dev   36a7af582a2c   8 minutes ago   846 MB
    172.17.0.1:6666/contrail-vcenter-plugin   queens-dev   fbb15449cf8e   8 minutes ago   936 MB
    172.17.0.1:6666/contrail-vcenter-manager   queens-dev   c1ed4ea64e5d   10 minutes ago   809 MB
    172.17.0.1:6666/contrail-status   queens-dev   ee2a40e70262   11 minutes ago   725 MB
    172.17.0.1:6666/contrail-openstack-neutron-init   queens-dev   65bb2098143b   11 minutes ago   891 MB
    172.17.0.1:6666/contrail-openstack-ironic-notification-manager   queens-dev   1b3d34a967fe   12 minutes ago   818 MB
    172.17.0.1:6666/contrail-openstack-heat-init   queens-dev   6745df23be26   13 minutes ago   724 MB
    172.17.0.1:6666/contrail-openstack-compute-init   queens-dev   9fda3a9c5aad   13 minutes ago   724 MB
    172.17.0.1:6666/contrail-nodemgr   queens-dev   3477b7e6e827   13 minutes ago   743 MB
    172.17.0.1:6666/contrail-node-init   queens-dev   30c46fa720d4   14 minutes ago   724 MB
    172.17.0.1:6666/contrail-mesosphere-mesos-manager   queens-dev   0107dd077044   14 minutes ago   735 MB
    172.17.0.1:6666/contrail-mesosphere-cni-init   queens-dev   d9702f349430   14 minutes ago   741 MB
    172.17.0.1:6666/contrail-kubernetes-kube-manager   queens-dev   cdf9e57d4bb2   15 minutes ago   737 MB
    172.17.0.1:6666/contrail-kubernetes-cni-init   queens-dev   07f37d06d988   15 minutes ago   742 MB
    172.17.0.1:6666/contrail-external-zookeeper   queens-dev   757b1d2c5365   15 minutes ago   144 MB
    172.17.0.1:6666/contrail-external-tftp   queens-dev   dcd2ac0d7d39   15 minutes ago   460 MB
    172.17.0.1:6666/contrail-external-redis   queens-dev   968a77ec9c76   16 minutes ago   107 MB
    172.17.0.1:6666/contrail-external-rabbitmq   queens-dev   c898aba7236b   16 minutes ago   189 MB
    172.17.0.1:6666/contrail-external-kafka   queens-dev   a2a2af6f8e39   16 minutes ago   681 MB
    172.17.0.1:6666/contrail-external-dhcp   queens-dev   3745bad0ba64   17 minutes ago   467 MB
    172.17.0.1:6666/contrail-external-cassandra   queens-dev   3589634aa302   18 minutes ago   323 MB
    172.17.0.1:6666/contrail-debug   queens-dev   889aa106d010   19 minutes ago   1.87 GB
    172.17.0.1:6666/contrail-controller-webui-job   queens-dev   04293efbe9ac   19 minutes ago   948 MB
    172.17.0.1:6666/contrail-controller-webui-web   queens-dev   5de1dfeda6ba   19 minutes ago   948 MB
    172.17.0.1:6666/contrail-controller-webui-base   queens-dev   98d4aaf39d89   20 minutes ago   948 MB
    172.17.0.1:6666/contrail-controller-control-named   queens-dev   7c18f65d40da   20 minutes ago   792 MB
    172.17.0.1:6666/contrail-controller-control-dns   queens-dev   4b5c475bb8ed   20 minutes ago   792 MB
    172.17.0.1:6666/contrail-controller-control-control   queens-dev   0b0199d28225   20 minutes ago   792 MB
    172.17.0.1:6666/contrail-controller-control-base   queens-dev   8beb513777c4   20 minutes ago   792 MB
    172.17.0.1:6666/contrail-controller-config-svcmonitor   queens-dev   c8fca7845155   21 minutes ago   1.03 GB
    172.17.0.1:6666/contrail-controller-config-schema   queens-dev   6053aa0d347c   21 minutes ago   1.03 GB
    172.17.0.1:6666/contrail-controller-config-devicemgr   queens-dev   708996f60ebe   21 minutes ago   1.07 GB
    172.17.0.1:6666/contrail-controller-config-api   queens-dev   e59fa342b203   21 minutes ago   1.09 GB
    172.17.0.1:6666/contrail-controller-config-base   queens-dev   a35b5240aaaf   22 minutes ago   1 GB
    172.17.0.1:6666/contrail-analytics-topology   queens-dev   657d98319dbc   23 minutes ago   876 MB
    172.17.0.1:6666/contrail-analytics-snmp-collector   queens-dev   a9a1ebd3d9b4   23 minutes ago   876 MB
    172.17.0.1:6666/contrail-analytics-query-engine   queens-dev   1ea2b9a539b3   23 minutes ago   876 MB
    172.17.0.1:6666/contrail-analytics-collector   queens-dev   f050cc3b9a60   23 minutes ago   876 MB
    172.17.0.1:6666/contrail-analytics-api   queens-dev   4870701a3ea1   23 minutes ago   876 MB
    172.17.0.1:6666/contrail-analytics-alarm-gen   queens-dev   ee90a0e68d8a   23 minutes ago   876 MB
    172.17.0.1:6666/contrail-analytics-base   queens-dev   14fb95916e8b   23 minutes ago   876 MB
    172.17.0.1:6666/contrail-base   queens-dev   df725de0a74a   25 minutes ago   700 MB
    172.17.0.1:6666/contrail-general-base   queens-dev   e72dde99d406   26 minutes ago   439 MB
    opencontrail/developer-sandbox   centos-7.4   88e86c876df3   About an hour ago   2.08 GB
    docker.io/registry   2   9c1f09fe9a86   5 days ago   33.3 MB
    docker.io/sebp/lighttpd   latest   373b9578e885   4 weeks ago   12.2 MB
    docker.io/ubuntu   16.04   a51debf7e1eb   5 weeks ago   116 MB
    docker.io/centos   7.4.1708   295a0b2bd8ea   2 months ago   197 MB
    docker.io/opencontrail/developer-sandbox   r5.0.1   618d6ada8c57   3 months ago   1.95 GB
    docker.io/cassandra   3.11.2   1d46448d0e52   4 months ago   323 MB
    docker.io/zookeeper   3.4.10   d9fe1374256f   13 months ago   144 MB
    docker.io/redis   4.0.2   8f2e175b3bd1   13 months ago   107 MB
    docker.io/rabbitmq   3.6.10-management   f10fce4f4bb8   17 months ago   124 MB

Installing TungstenFabric r5.0.1 (kolla queens)

Separately from the nightly builds, a stable release of TungstenFabric (r5.0.1, https://hub.docker.com/u/tungstenfabric/ ) has been published, so here is an instances.yaml for kolla queens.

As in the link below, the EC2 instance is CentOS 7.5 (ami-3185744e, t2.2xlarge, 20 GB disk).

http://aaabbb-200904.hatenablog.jp/entry/2018/04/28/215922

    yum -y install epel-release git ansible-2.4.2.0
    ssh-keygen
    cd .ssh/
    cat id_rsa.pub >> authorized_keys
    ssh <ec2-instance-ip>    # adds the IP to .ssh/known_hosts
    cd
    git clone -b R5.0 http://github.com/Juniper/contrail-ansible-deployer
    cd contrail-ansible-deployer
    vi config/instances.yaml

(Enter the following.)

    provider_config:
      bms:
        ssh_pwd: root
        ssh_user: root
        domainsuffix: local
        ntpserver: 0.centos.pool.ntp.org
    instances:
      bms1:
        provider: bms
        ip: 172.31.2.76 # IP of the EC2 instance
        roles:
          config_database:
          config:
          control:
          analytics_database:
          analytics:
          webui:
          vrouter:
          openstack:
          openstack_compute:
    contrail_configuration:
      RABBITMQ_NODE_PORT: 5673
      AUTH_MODE: keystone
      KEYSTONE_AUTH_URL_VERSION: /v3
      CONTRAIL_CONTAINER_TAG: r5.0.1
      OPENSTACK_VERSION: queens
    kolla_config:
      kolla_globals:
        enable_haproxy: no
        enable_swift: no
      kolla_passwords:
        keystone_admin_password: contrail123
    global_configuration:
      CONTAINER_REGISTRY: tungstenfabric

    ansible-playbook -i inventory/ playbooks/configure_instances.yml

Note: takes about 10 minutes.

    ansible-playbook -i inventory/ playbooks/install_openstack.yml

Note: takes about 20 minutes.

    ansible-playbook -i inventory/ -e orchestrator=openstack playbooks/install_contrail.yml

Note: takes about 10 minutes.

One change from the earlier setup: CONTRAIL_CONTAINER_TAG is used instead of CONTRAIL_VERSION.

Note: with CONTRAIL_VERSION, the image tag is determined in combination with OPENSTACK_VERSION (r5.0.1-queens, for example), but for the r5.0.1 release no tags containing OPENSTACK_VERSION have been pushed to Docker Hub.
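The tag selection described in the note can be written down as a small function. This is a sketch of the rule as stated in this post, not code taken from contrail-ansible-deployer: the precedence of CONTRAIL_CONTAINER_TAG and the hyphen-joined form are assumptions inferred from the `r5.0.1-queens` example, and `resolve_image_tag` and `image_ref` are hypothetical helper names.

```python
from typing import Mapping


def resolve_image_tag(cfg: Mapping[str, str]) -> str:
    """Return the container tag implied by a contrail_configuration mapping.

    Assumed rule (from the note in this post, not read from the deployer
    source): CONTRAIL_CONTAINER_TAG is used as-is when present; otherwise
    the tag is CONTRAIL_VERSION joined to OPENSTACK_VERSION with a hyphen.
    """
    if "CONTRAIL_CONTAINER_TAG" in cfg:
        return cfg["CONTRAIL_CONTAINER_TAG"]
    version = cfg["CONTRAIL_VERSION"]
    openstack = cfg.get("OPENSTACK_VERSION")
    return f"{version}-{openstack}" if openstack else version


def image_ref(registry: str, name: str, cfg: Mapping[str, str]) -> str:
    """Build a full image reference such as registry/name:tag."""
    return f"{registry}/{name}:{resolve_image_tag(cfg)}"


if __name__ == "__main__":
    with_version = {"CONTRAIL_VERSION": "r5.0.1", "OPENSTACK_VERSION": "queens"}
    with_tag = {"CONTRAIL_CONTAINER_TAG": "r5.0.1", "OPENSTACK_VERSION": "queens"}
    print(image_ref("tungstenfabric", "contrail-vrouter-agent", with_version))
    print(image_ref("tungstenfabric", "contrail-vrouter-agent", with_tag))
```

Under these assumptions the first configuration asks for a `r5.0.1-queens` tag, which is the one that is missing on Docker Hub, while the second asks for plain `r5.0.1`.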

After installation, I created two cirros VMs and confirmed that they can reach each other.

    [root@ip-172-31-2-76 ~]# . /etc/kolla/kolla-toolbox/admin-openrc.sh
    [root@ip-172-31-2-76 ~]#
    [root@ip-172-31-2-76 ~]#
    [root@ip-172-31-2-76 ~]# openstack server list
    +--------------------------------------+----------+--------+----------------------+---------+---------+
    | ID                                   | Name     | Status | Networks             | Image   | Flavor  |
    +--------------------------------------+----------+--------+----------------------+---------+---------+
    | 4d52ab7c-7d43-48c4-b345-010ebd1ca858 | test_vm2 | ACTIVE | testvn=192.168.100.4 | cirros2 | m1.tiny |
    | 72717182-5cd5-405d-85cd-fd5a1d895ae6 | test_vm1 | ACTIVE | testvn=192.168.100.3 | cirros2 | m1.tiny |
    +--------------------------------------+----------+--------+----------------------+---------+---------+
    [root@ip-172-31-2-76 ~]# ip route
    default via 172.31.0.1 dev vhost0
    169.254.0.1 dev vhost0 proto 109 scope link
    169.254.0.3 dev vhost0 proto 109 scope link
    169.254.0.4 dev vhost0 proto 109 scope link
    172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1
    172.31.0.0/20 dev vhost0 proto kernel scope link src 172.31.2.76
    [root@ip-172-31-2-76 ~]#
    [root@ip-172-31-2-76 ~]# ssh cirros@169.254.0.3
    cirros@169.254.0.3's password:
    $
    $ ping 192.168.100.1
    PING 192.168.100.1 (192.168.100.1): 56 data bytes
    64 bytes from 192.168.100.1: seq=0 ttl=64 time=20.765 ms
    64 bytes from 192.168.100.1: seq=1 ttl=64 time=4.311 ms
    ^C
    --- 192.168.100.1 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 4.311/12.538/20.765 ms
    $
    $ ping 192.168.100.4
    PING 192.168.100.4 (192.168.100.4): 56 data bytes
    64 bytes from 192.168.100.4: seq=0 ttl=64 time=8.508 ms
    64 bytes from 192.168.100.4: seq=1 ttl=64 time=2.377 ms
    ^C
    --- 192.168.100.4 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 2.377/5.442/8.508 ms
    $
    $
    $ ip -o a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1\ link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    1: lo inet 127.0.0.1/8 scope host lo\ valid_lft forever preferred_lft forever
    1: lo inet6 ::1/128 scope host \ valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000\ link/ether 02:39:c9:aa:ca:f2 brd ff:ff:ff:ff:ff:ff
    2: eth0 inet 192.168.100.3/24 brd 192.168.100.255 scope global eth0\ valid_lft forever preferred_lft forever
    2: eth0 inet6 fe80::39:c9ff:feaa:caf2/64 scope link \ valid_lft forever preferred_lft forever
    $
    $

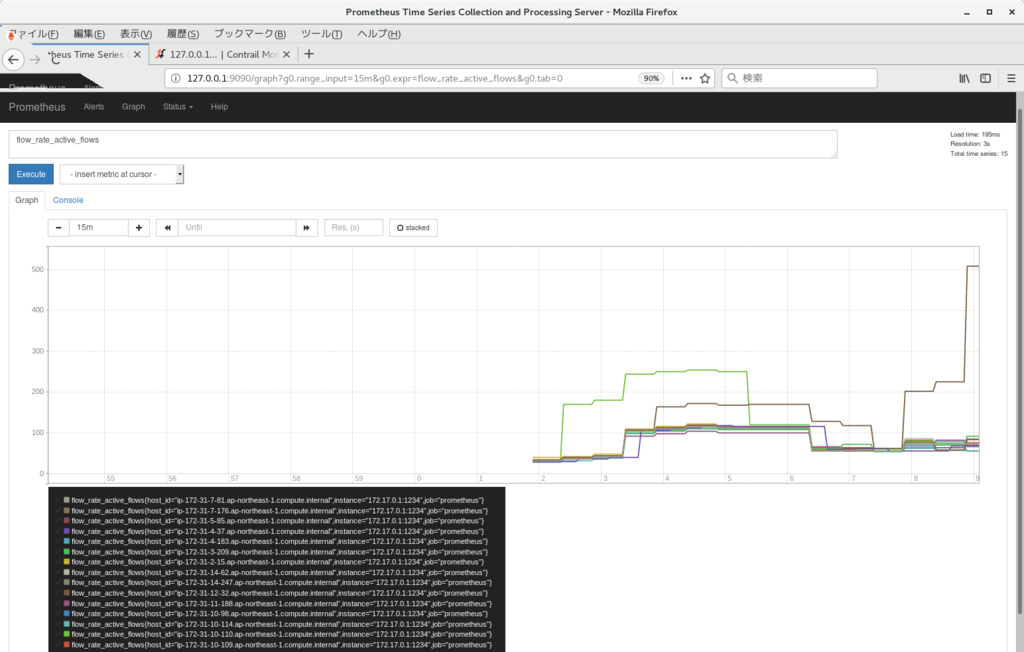

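Prometheus integration for tungsten-fabric

In tungsten-fabric, visualization and alarms can be set up with the analytics nodes, but when an existing monitoring system is already in place, it is sometimes more convenient to fetch the performance metrics through the API and visualize them there.

As a sample, I tried importing them into Prometheus.

https://github.com/tnaganawa/tf-analytics-exporter

By design, the vrouter holds many counters, such as packet counts and error counts, and they can be retrieved in the following three ways.

1. cli (retrieved directly via netlink)

2. inspect (retrieved from vrouter-agent)

3. analytics (values sent to analytics via sandesh are retrieved)

Note: see the following for the architecture.

https://github.com/Juniper/contrail-controller/blob/master/src/vnsw/agent/README

This time, as the simple case, I tried the method that retrieves values from the analytics UVEs.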
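To make the UVE-to-Prometheus step concrete, the sketch below turns a nested UVE-like dictionary into lines of the Prometheus text exposition format. It is not the code of tf-analytics-exporter: the sample field names (`VrouterStatsAgent`, `flow_rate`, `active_flows`), the `tf_vrouter` metric prefix and the `vrouter` label are hypothetical and used only for illustration, and fetching the UVE from the analytics API is left out.

```python
import re
from typing import Any, Dict, Iterator, Tuple


def flatten_numeric(prefix: str, value: Any) -> Iterator[Tuple[str, float]]:
    """Yield (metric_name, value) for every numeric leaf of a nested dict."""
    if isinstance(value, bool):
        return  # booleans are ints in Python; skip them on purpose
    if isinstance(value, (int, float)):
        yield prefix, float(value)
    elif isinstance(value, dict):
        for key, child in value.items():
            yield from flatten_numeric(f"{prefix}_{key}", child)


def to_prometheus(vrouter: str, uve: Dict[str, Any], prefix: str = "tf_vrouter") -> str:
    """Render the numeric leaves of one vrouter UVE as exposition-format lines."""
    lines = []
    for name, val in sorted(flatten_numeric(prefix, uve)):
        # Prometheus metric names may only contain [a-zA-Z0-9_:].
        metric = re.sub(r"[^a-zA-Z0-9_:]", "_", name).lower()
        lines.append(f'{metric}{{vrouter="{vrouter}"}} {val}')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical UVE fragment; real UVEs contain many more fields.
    sample = {"VrouterStatsAgent": {"flow_rate": {"active_flows": 12}}}
    print(to_prometheus("node1", sample), end="")
```

Serving this text over HTTP and pointing a Prometheus scrape job at it would be the remaining piece; string fields and lists are simply ignored in this sketch.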

As a result, values such as the number of flows can now be visualized per vrouter.

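oVirt integration for TungstenFabric

(Although oVirt is not officially among the supported integration targets) I tried integrating oVirt with TungstenFabric, so I am recording it here.

https://github.com/tnaganawa/ovirt-tungstenfabric-integration

It works by using oVirt's neutron integration feature.

https://www.ovirt.org/documentation/admin-guide/chap-External_Providers/

With that alone, the processing that has to run on the vrouter side was missing, so I adjusted things so that a vdsm hook creates the vif.

The integration went more smoothly than expected, so if you have already used oVirt, it may be worth trying as a way to test the External Provider feature.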

support-info for TungstenFabric

This summarizes the information needed when analyzing the behavior of TungstenFabric.

First, as information on the filesystem, the files under /var/log/contrail and /etc/contrail, together with docker logs for each container, show a fair amount about the state of each container.

Beyond this, to examine the data actually stored in cassandra or the detailed state inside a process, information can be obtained from the following.

    config-api: http://(config-node):8082
    inspect:    http://(control-node):8083
                http://(vrouter-node):8085

Accessing each port with a browser, curl, etc. returns detailed information about each process.

Note: in particular, http://(control-node):8083/Snh_ShowRouteReq returns the routes held by the controller, so it may be good to collect this information first.

Note: inspect output is downloaded as XML, but it can be converted to HTML with commands such as the following.

    yum install libxslt    # centos7
    curl -O https://raw.githubusercontent.com/Juniper/contrail-sandesh/master/library/common/webs/universal_parse.xsl
    xsltproc universal_parse.xsl xxx.xml > xxx.html
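When the inspect XML only needs to be skimmed or grepped rather than rendered as HTML, a few lines of Python can build the URL and list the leaf values. This is a sketch: the port numbers are the ones listed above, while `inspect_url`, `leaf_values` and the sample XML are hypothetical and do not reflect the actual Sandesh response schema.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Ports as listed in this post.
INSPECT_PORTS = {"config-api": 8082, "control": 8083, "vrouter": 8085}


def inspect_url(host: str, kind: str, request: str = "") -> str:
    """Build the URL for a config-api / inspect endpoint on the given host."""
    return f"http://{host}:{INSPECT_PORTS[kind]}/{request}"


def leaf_values(xml_text: str) -> List[Tuple[str, str]]:
    """Return (tag, text) for every element without children and with text."""
    root = ET.fromstring(xml_text)
    return [
        (el.tag, el.text.strip())
        for el in root.iter()
        if len(el) == 0 and el.text and el.text.strip()
    ]


if __name__ == "__main__":
    print(inspect_url("control-node", "control", "Snh_ShowRouteReq"))
    # Hypothetical response fragment, only to show the flattening.
    sample = "<resp><name>inet.0</name><routes><prefix>10.0.0.0/24</prefix></routes></resp>"
    for tag, text in leaf_values(sample):
        print(tag, text)
```

The flattened pairs are convenient to attach to a mail or to diff between two points in time; the XSL conversion above remains the better choice for reading a full response.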

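In addition, general OS information (sosreport output, etc.) and orchestrator information may be needed.

Note: for kolla openstack, /etc/kolla, /var/lib/docker/volumes/kolla_log, etc.

When sending information by mail or similar, this is probably a good place to start.

Data redundancy behavior of configdb

Tungsten Fabric (TF below) uses cassandra as its database, and I checked how redundancy works for this part.

The environment is based on the k8s+TF setup in this link (http://aaabbb-200904.hatenablog.jp/entry/2018/04/28/215922), with the TF controller increased to three nodes.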

    provider_config:
      bms:
        ssh_user: root
        ssh_public_key: /root/.ssh/id_rsa.pub
        ssh_private_key: /root/.ssh/id_rsa
        domainsuffix: local
    instances:
      bms1:
        provider: bms
        roles:
          config_database:
          config:
          control:
          analytics_database:
          analytics:
          webui:
          k8s_master:
          kubemanager:
        ip: 172.31.9.60
      bms2:
        provider: bms
        roles:
          config_database:
          config:
          control:
          analytics_database:
          analytics:
          webui:
          kubemanager:
        ip: 172.31.5.117
      bms3:
        provider: bms
        roles:
          config_database:
          config:
          control:
          analytics_database:
          analytics:
          webui:
          kubemanager:
        ip: 172.31.2.97
      bms11:
        provider: bms
        roles:
          vrouter:
          k8s_node:
        ip: 172.31.7.122
    contrail_configuration:
      CONTAINER_REGISTRY: opencontrailnightly
      CONTRAIL_VERSION: latest
      KUBERNETES_CLUSTER_PROJECT: {}

When cassandra data is created or modified, it is replicated between nodes, and the number of replicas is determined by the replication factor defined on the KEYSPACE.

http://cassandra.apache.org/doc/latest/architecture/dynamo.html#replication-strategy

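Checking with cqlsh showed that the config keyspaces use replication factor 3, so the data is kept on as many nodes as there are in the cluster.

Therefore, even if data is corrupted by a disk error or the like, the local data can be recovered from the data on another node.

Note: config (excerpt, some keyspaces only)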
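The arithmetic behind "replication factor 3 on 3 nodes" can be spelled out as follows. The quorum formula is Cassandra's standard one; which consistency level the TF config services actually use is not stated in this post, so the failure tolerance below should be read as an assumption that holds only for QUORUM reads and writes. The function names are hypothetical.

```python
def quorum(replication_factor: int) -> int:
    """Replicas that must respond at consistency level QUORUM."""
    return replication_factor // 2 + 1


def effective_ownership(replication_factor: int, nodes: int) -> float:
    """Average fraction of the data each node holds (1.0 means a full copy)."""
    return min(replication_factor, nodes) / nodes


def tolerated_failures(replication_factor: int) -> int:
    """Replicas that may be down while QUORUM operations still succeed."""
    return replication_factor - quorum(replication_factor)


if __name__ == "__main__":
    rf, nodes = 3, 3
    print("quorum:", quorum(rf))
    print("ownership per node:", effective_ownership(rf, nodes))
    print("tolerated replica failures at QUORUM:", tolerated_failures(rf))
```

With `rf = 3` and three nodes every node holds a full copy, which is why a node whose data directory was lost can be rebuilt entirely from its peers.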

    # docker exec -it config_database_cassandra_1 bash
    root@ip-172-31-9-60:/# cqlsh 127.0.0.1 9041
    Connected to contrail_database at 127.0.0.1:9041.
    [cqlsh 5.0.1 | Cassandra 3.11.2 | CQL spec 3.4.4 | Native protocol v4]
    Use HELP for help.
    cqlsh> DESCRIBE KEYSPACES
    system_schema  config_db_uuid      system_traces  dm_keyspace
    system_auth    to_bgp_keyspace     useragent
    system         system_distributed  svc_monitor_keyspace
    cqlsh>
    cqlsh> DESCRIBE to_bgp_keyspace
    CREATE KEYSPACE to_bgp_keyspace WITH replication = {'class': 'SimpleStrategy', 'replication_factor': '3'} AND durable_writes = true;
    (snip)

The cqlsh command above is for inspecting and modifying cassandra data; the state of the cassandra process itself can be checked with the nodetool command.

Running nodetool info, nodetool status, etc. shows the current data size and heap usage, the state of each node in the cluster, and so on.

Note: 7201 is the management port for configdb.

    root@ip-172-31-9-60:/# nodetool -p 7201 info
    ID                     : c1c03c07-7553-419a-a902-a3ccacd69ea0
    Gossip active          : true
    Thrift active          : true
    Native Transport active: true
    Load                   : 100.94 KiB    <-- shows that the DB data size is about 100 KB
    Generation No          : 1529829846
    Uptime (seconds)       : 1307
    Heap Memory (MB)       : 87.37 / 1936.00    <-- shows that java heap usage is about 87 MB
    Off Heap Memory (MB)   : 0.00
    Data Center            : datacenter1
    Rack                   : rack1
    Exceptions             : 0
    Key Cache              : entries 31, size 2.46 KiB, capacity 96 MiB, 417 hits, 523 requests, 0.797 recent hit rate, 14400 save period in seconds
    Row Cache              : entries 0, size 0 bytes, capacity 0 bytes, 0 hits, 0 requests, NaN recent hit rate, 0 save period in seconds
    Counter Cache          : entries 0, size 0 bytes, capacity 48 MiB, 0 hits, 0 requests, NaN recent hit rate, 7200 save period in seconds
    Chunk Cache            : entries 16, size 1 MiB, capacity 452 MiB, 178 misses, 1651 requests, 0.892 recent hit rate, NaN microseconds miss latency
    Percent Repaired       : 100.0%
    Token                  : (invoke with -T/--tokens to see all 256 tokens)
    root@ip-172-31-9-60:/#
    root@ip-172-31-9-60:/# nodetool -p 7201 status
    Datacenter: datacenter1
    =======================
    Status=Up/Down
    |/ State=Normal/Leaving/Joining/Moving
    --  Address       Load        Tokens  Owns (effective)  Host ID                               Rack
    UN  172.31.9.60   578.4 KiB   256     100.0%            c1c03c07-7553-419a-a902-a3ccacd69ea0  rack1
    UN  172.31.5.117  434.21 KiB  256     100.0%            65f5c795-1f98-4e65-9d5e-10bacf2af7a4  rack1
    UN  172.31.2.97   578.68 KiB  256     100.0%            ba2b4ece-740b-4cd2-a157-fb9c2f1e0d65  rack1
    root@ip-172-31-9-60:/#

-> This shows that all three nodes are in the Up/Normal state.
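The Up/Normal check can also be scripted, for example as part of a health check before and after maintenance. The sketch below parses text in the `nodetool status` layout shown in this post; `node_states` and `all_up_normal` are hypothetical helper names, and obtaining the text (for instance via docker exec) is left to the caller.

```python
import re
from typing import Dict

# A node line starts with a two-letter code: Up/Down, then Normal/Leaving/Joining/Moving.
NODE_LINE = re.compile(r"^([UD][NLJM])\s+(\S+)\s")


def node_states(status_output: str) -> Dict[str, str]:
    """Map each node address to its two-letter status code."""
    states: Dict[str, str] = {}
    for line in status_output.splitlines():
        match = NODE_LINE.match(line.strip())
        if match:
            states[match.group(2)] = match.group(1)
    return states


def all_up_normal(status_output: str) -> bool:
    """True only if at least one node is listed and every node is UN."""
    states = node_states(status_output)
    return bool(states) and all(code == "UN" for code in states.values())


if __name__ == "__main__":
    sample = """Datacenter: datacenter1
=======================
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address       Load        Tokens  Owns (effective)  Host ID                               Rack
UN  172.31.9.60   578.4 KiB   256     100.0%            c1c03c07-7553-419a-a902-a3ccacd69ea0  rack1
UN  172.31.5.117  434.21 KiB  256     100.0%            65f5c795-1f98-4e65-9d5e-10bacf2af7a4  rack1
UN  172.31.2.97   578.68 KiB  256     100.0%            ba2b4ece-740b-4cd2-a157-fb9c2f1e0d65  rack1
"""
    print(node_states(sample))
    print(all_up_normal(sample))
```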

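While all three cassandra nodes are running, changes to config made from the webui, the API, etc. are replicated between the DBs automatically, so no additional work is needed.

However, in the following situations replication does not happen automatically, so nodetool repair has to be run manually to bring the replicas back in sync.

1. One of the cassandra nodes was stopped for 3 hours or more (default value: http://cassandra.apache.org/doc/latest/configuration/cassandra_config_file.html#max-hint-window-in-ms ) and writes were made on another node during that time

2. cassandra data was lost because of disk corruption or the like

Note: see also the following.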

http://cassandra.apache.org/doc/latest/operating/repair.html
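The two conditions can be condensed into a small decision helper, for example for a runbook. This is a sketch: the 3-hour default corresponds to `max_hint_window_in_ms` (10800000 ms), and `needs_manual_repair` with its parameters is a hypothetical name introduced here; whether writes happened during the outage has to be determined by the operator.

```python
from datetime import timedelta

# Default of max_hint_window_in_ms in cassandra.yaml: 10800000 ms = 3 hours.
DEFAULT_MAX_HINT_WINDOW = timedelta(milliseconds=10_800_000)


def needs_manual_repair(
    downtime: timedelta,
    writes_during_downtime: bool,
    data_lost: bool,
    hint_window: timedelta = DEFAULT_MAX_HINT_WINDOW,
) -> bool:
    """Apply the two conditions from this post.

    1. The node was down for at least the hint window while other nodes
       accepted writes, so those writes are not replayed automatically.
    2. The node's data was lost (disk corruption and the like).
    """
    if data_lost:
        return True
    return bool(writes_during_downtime and downtime >= hint_window)


if __name__ == "__main__":
    print(needs_manual_repair(timedelta(hours=1), True, False))
    print(needs_manual_repair(timedelta(hours=4), True, False))
    print(needs_manual_repair(timedelta(0), False, True))
```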

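When nodetool repair runs, output like the following appears and the DB is repaired.

Note: it depends on the amount of data, but for a freshly installed configdb it completes in about 10 seconds.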

    root@ip-172-31-9-60:/# nodetool -p 7201 repair --full
    [2018-06-24 09:06:30,116] Starting repair command #1 (e204ce60-778d-11e8-a576-1d0021e0e5fc), repairing keyspace to_bgp_keyspace with repair options (parallelism: parallel, primary range: false, incremental: false, job threads: 1, ColumnFamilies: [], dataCenters: [], hosts: [], # of ranges: 768, pull repair: false)
    [2018-06-24 09:06:33,428] Repair session e24559d0-778d-11e8-a576-1d0021e0e5fc for range [(-5608140120549516339,-5575501037168442187], (-8319274023993233504,-8299210256863649616], (-4245142859867217208,-4240769915265534326], (1027014965654704718,1029169142596609729], (5022264611528068477,5035402145262803026], (-2571074354323857941,-2554982761970724031], (-4470575950908750827,-4449301038271372254], (-5384933651707468057,-5373862792440986216], (-5352184587049184101,-5350028384852015586], (-3332727924868644218,-3300828413730832178], (327426618458049693,333131064481674807], (-1962725683988
    (snip)

To check how repair behaves after data corruption, I deleted the configdb data of one node with the following commands and then ran nodetool repair on the node whose data had been deleted.

    (on all 3 nodes)# docker-compose -f /etc/contrail/config_database/docker-compose.yaml down
    (on 1 node)# rm -rf /var/lib/docker/volumes/config_database_config_cassandra/_data/*
    (on all 3 nodes)# docker-compose -f /etc/contrail/config_database/docker-compose.yaml up -d

In the nodetool info output, the repaired percentage dropped once after the deletion and returned to 100% after the repair.

    Note: right after the deletion
    root@ip-172-31-9-60:/# nodetool -p 7201 info
    ID                     : c1c03c07-7553-419a-a902-a3ccacd69ea0
    Gossip active          : true
    Thrift active          : true
    Native Transport active: true
    Load                   : 578.4 KiB
    Generation No          : 1529832761
    Uptime (seconds)       : 349
    Heap Memory (MB)       : 183.52 / 1936.00
    Off Heap Memory (MB)   : 0.00
    Data Center            : datacenter1
    Rack                   : rack1
    Exceptions             : 0
    Key Cache              : entries 144, size 13.6 KiB, capacity 96 MiB, 4798 hits, 4947 requests, 0.970 recent hit rate, 14400 save period in seconds
    Row Cache              : entries 0, size 0 bytes, capacity 0 bytes, 0 hits, 0 requests, NaN recent hit rate, 0 save period in seconds
    Counter Cache          : entries 0, size 0 bytes, capacity 48 MiB, 0 hits, 0 requests, NaN recent hit rate, 7200 save period in seconds
    Chunk Cache            : entries 25, size 1.56 MiB, capacity 452 MiB, 39 misses, 5127 requests, 0.992 recent hit rate, NaN microseconds miss latency
    Percent Repaired       : 93.39964148074145%    <-- repaired percentage dropped
    Token                  : (invoke with -T/--tokens to see all 256 tokens)
    root@ip-172-31-9-60:/#

    Note: after nodetool repair
    root@ip-172-31-9-60:/# nodetool -p 7201 info
    ID                     : c1c03c07-7553-419a-a902-a3ccacd69ea0
    Gossip active          : true
    Thrift active          : true
    Native Transport active: true
    Load                   : 598.03 KiB
    Generation No          : 1529832761
    Uptime (seconds)       : 460
    Heap Memory (MB)       : 91.59 / 1936.00
    Off Heap Memory (MB)   : 0.00
    Data Center            : datacenter1
    Rack                   : rack1
    Exceptions             : 0
    Key Cache              : entries 150, size 14.2 KiB, capacity 96 MiB, 4816 hits, 4965 requests, 0.970 recent hit rate, 14400 save period in seconds
    Row Cache              : entries 0, size 0 bytes, capacity 0 bytes, 0 hits, 0 requests, NaN recent hit rate, 0 save period in seconds
    Counter Cache          : entries 0, size 0 bytes, capacity 48 MiB, 0 hits, 0 requests, NaN recent hit rate, 7200 save period in seconds
    Chunk Cache            : entries 29, size 1.81 MiB, capacity 452 MiB, 43 misses, 5186 requests, 0.992 recent hit rate, 3371.628 microseconds miss latency
    Percent Repaired       : 100.0%    <-- repaired percentage recovered
    Token                  : (invoke with -T/--tokens to see all 256 tokens)
    root@ip-172-31-9-60:/#

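The output above alone did not show which parts had been repaired, so I built the same environment again and checked the cassandra log. Log lines like the following were written, confirming that data is replicated when nodetool repair is run.

Note: nodetool repair was run twice, at 05:49 and 05:54. In the first run, log lines such as "out of sync" and "Performing streaming repair" appear and data was repaired; in the second run no repair took place.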
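To see at a glance which tables were actually repaired, the "out of sync" lines in system.log can be totalled per table. The sketch below relies only on the message format visible in this post's log excerpt; `out_of_sync_by_table` is a hypothetical helper name, and the count is a sum over endpoint pairs, so a table that differs against two peers is counted twice.

```python
import re
from collections import defaultdict
from typing import Dict

# Matches e.g. "Endpoints /a and /b have 4 range(s) out of sync for route_target_table"
OUT_OF_SYNC = re.compile(
    r"Endpoints /(\S+) and /(\S+) have (\d+) range\(s\) out of sync for (\S+)"
)


def out_of_sync_by_table(log_text: str) -> Dict[str, int]:
    """Sum the out-of-sync range counts per table over all endpoint pairs."""
    totals: Dict[str, int] = defaultdict(int)
    for match in OUT_OF_SYNC.finditer(log_text):
        totals[match.group(4)] += int(match.group(3))
    return dict(totals)


if __name__ == "__main__":
    sample = (
        "INFO [RepairJobTask:2] 2018-07-01 05:49:24,671 SyncTask.java:73 - "
        "[repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 "
        "and /172.31.3.118 have 4 range(s) out of sync for route_target_table\n"
        "INFO [RepairJobTask:3] 2018-07-01 05:49:24,673 SyncTask.java:73 - "
        "[repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 "
        "and /172.31.3.118 have 4 range(s) out of sync for route_target_table\n"
    )
    print(out_of_sync_by_table(sample))
```

An empty result for the second repair run would match the observation that no data was streamed the second time.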

    # docker exec -it config_database_cassandra_1 bash
    root@ip-172-31-3-118:/# grep 'RepairJobTask' /var/log/cassandra/system.log | grep -v RepairRunnable
    INFO [RepairJobTask:2] 2018-07-01 05:49:24,671 SyncTask.java:73 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 have 4 range(s) out of sync for route_target_table
    INFO [RepairJobTask:3] 2018-07-01 05:49:24,673 SyncTask.java:73 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 have 4 range(s) out of sync for route_target_table
    INFO [RepairJobTask:3] 2018-07-01 05:49:24,674 LocalSyncTask.java:71 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 4 ranges with /172.31.3.108
    INFO [RepairJobTask:4] 2018-07-01 05:49:24,675 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for route_target_table
    INFO [RepairJobTask:2] 2018-07-01 05:49:24,675 LocalSyncTask.java:71 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 4 ranges with /172.31.15.136
    INFO [RepairJobTask:1] 2018-07-01 05:49:24,707 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for service_chain_table
    INFO [RepairJobTask:5] 2018-07-01 05:49:24,707 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for service_chain_table
    INFO [RepairJobTask:2] 2018-07-01 05:49:24,716 StreamResultFuture.java:90 - [Stream #826ab040-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair
    INFO [RepairJobTask:3] 2018-07-01 05:49:24,719 StreamResultFuture.java:90 - [Stream #826afe60-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair
    INFO [RepairJobTask:6] 2018-07-01 05:49:24,721 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for service_chain_table
    INFO [RepairJobTask:5] 2018-07-01 05:49:24,729 RepairJob.java:143 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] service_chain_table is fully synced
    INFO [RepairJobTask:5] 2018-07-01 05:49:24,743 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for service_chain_uuid_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:24,744 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for service_chain_uuid_table
    INFO [RepairJobTask:4] 2018-07-01 05:49:24,745 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for service_chain_uuid_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:24,745 RepairJob.java:143 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] service_chain_uuid_table is fully synced
    INFO [RepairJobTask:4] 2018-07-01 05:49:24,750 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for service_chain_ip_address_table
    INFO [RepairJobTask:5] 2018-07-01 05:49:24,751 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for service_chain_ip_address_table
    INFO [RepairJobTask:6] 2018-07-01 05:49:24,752 SyncTask.java:66 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for service_chain_ip_address_table
    INFO [RepairJobTask:5] 2018-07-01 05:49:24,752 RepairJob.java:143 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] service_chain_ip_address_table is fully synced
    INFO [RepairJobTask:3] 2018-07-01 05:49:24,972 RepairJob.java:143 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] route_target_table is fully synced
    INFO [RepairJobTask:3] 2018-07-01 05:49:24,974 RepairSession.java:270 - [repair #806c9650-7cf2-11e8-ad88-a1b725c94e34] Session completed successfully
    INFO [RepairJobTask:3] 2018-07-01 05:49:27,364 SyncTask.java:66 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for dm_pnf_resource_table
    INFO [RepairJobTask:2] 2018-07-01 05:49:27,365 SyncTask.java:66 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for dm_pnf_resource_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:27,366 SyncTask.java:66 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for dm_pnf_resource_table
    INFO [RepairJobTask:2] 2018-07-01 05:49:27,366 RepairJob.java:143 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] dm_pnf_resource_table is fully synced
    INFO [RepairJobTask:1] 2018-07-01 05:49:27,405 SyncTask.java:66 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for dm_pr_vn_ip_table
    INFO [RepairJobTask:3] 2018-07-01 05:49:27,406 SyncTask.java:66 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for dm_pr_vn_ip_table
    INFO [RepairJobTask:4] 2018-07-01 05:49:27,407 SyncTask.java:66 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for dm_pr_vn_ip_table
    INFO [RepairJobTask:3] 2018-07-01 05:49:27,407 RepairJob.java:143 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] dm_pr_vn_ip_table is fully synced
    INFO [RepairJobTask:4] 2018-07-01 05:49:27,423 SyncTask.java:66 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for dm_pr_ae_id_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:27,424 SyncTask.java:66 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for dm_pr_ae_id_table
    INFO [RepairJobTask:2] 2018-07-01 05:49:27,425 SyncTask.java:66 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for dm_pr_ae_id_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:27,425 RepairJob.java:143 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] dm_pr_ae_id_table is fully synced
    INFO [RepairJobTask:1] 2018-07-01 05:49:27,426 RepairSession.java:270 - [repair #82d5ce20-7cf2-11e8-ad88-a1b725c94e34] Session completed successfully
    INFO [RepairJobTask:2] 2018-07-01 05:49:28,374 SyncTask.java:66 - [repair #8439f200-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for useragent_keyval_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:28,375 SyncTask.java:66 - [repair #8439f200-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for useragent_keyval_table
    INFO [RepairJobTask:4] 2018-07-01 05:49:28,380 SyncTask.java:66 - [repair #8439f200-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for useragent_keyval_table
    INFO [RepairJobTask:3] 2018-07-01 05:49:28,381 RepairJob.java:143 - [repair #8439f200-7cf2-11e8-ad88-a1b725c94e34] useragent_keyval_table is fully synced
    INFO [RepairJobTask:3] 2018-07-01 05:49:28,382 RepairSession.java:270 - [repair #8439f200-7cf2-11e8-ad88-a1b725c94e34] Session completed successfully
    INFO [RepairJobTask:4] 2018-07-01 05:49:30,803 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for service_instance_table
    INFO [RepairJobTask:3] 2018-07-01 05:49:30,803 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for service_instance_table
    INFO [RepairJobTask:2] 2018-07-01 05:49:30,807 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for service_instance_table
    INFO [RepairJobTask:3] 2018-07-01 05:49:30,808 RepairJob.java:143 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] service_instance_table is fully synced
    INFO [RepairJobTask:2] 2018-07-01 05:49:30,893 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for healthmonitor_table
    INFO [RepairJobTask:4] 2018-07-01 05:49:30,904 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for healthmonitor_table
    INFO [RepairJobTask:5] 2018-07-01 05:49:30,905 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for healthmonitor_table
    INFO [RepairJobTask:4] 2018-07-01 05:49:30,905 RepairJob.java:143 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] healthmonitor_table is fully synced
    INFO [RepairJobTask:5] 2018-07-01 05:49:31,007 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for pool_table
    INFO [RepairJobTask:2] 2018-07-01 05:49:31,007 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for pool_table
    INFO [RepairJobTask:3] 2018-07-01 05:49:31,008 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for pool_table
    INFO [RepairJobTask:2] 2018-07-01 05:49:31,008 RepairJob.java:143 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] pool_table is fully synced
    INFO [RepairJobTask:3] 2018-07-01 05:49:31,071 SyncTask.java:66 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for loadbalancer_table
    INFO [RepairJobTask:5] 2018-07-01 05:49:31,071 SyncTask.java:73 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 have 3 range(s) out of sync for loadbalancer_table
    INFO [RepairJobTask:5] 2018-07-01 05:49:31,072 LocalSyncTask.java:71 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 3 ranges with /172.31.3.108
    INFO [RepairJobTask:4] 2018-07-01 05:49:31,073 SyncTask.java:73 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 have 3 range(s) out of sync for loadbalancer_table
    INFO [RepairJobTask:4] 2018-07-01 05:49:31,073 LocalSyncTask.java:71 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 3 ranges with /172.31.15.136
    INFO [RepairJobTask:4] 2018-07-01 05:49:31,075 StreamResultFuture.java:90 - [Stream #863acb10-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair
    INFO [RepairJobTask:5] 2018-07-01 05:49:31,075 StreamResultFuture.java:90 - [Stream #863aa400-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair
    INFO [RepairJobTask:5] 2018-07-01 05:49:31,188 RepairJob.java:143 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] loadbalancer_table is fully synced
    INFO [RepairJobTask:5] 2018-07-01 05:49:31,189 RepairSession.java:270 - [repair #84ca9940-7cf2-11e8-ad88-a1b725c94e34] Session completed successfully
    INFO [RepairJobTask:4] 2018-07-01 05:49:33,099 SyncTask.java:66 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for obj_fq_name_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:33,100 SyncTask.java:73 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 have 32 range(s) out of sync for obj_fq_name_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:33,101 LocalSyncTask.java:71 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 32 ranges with /172.31.15.136
    INFO [RepairJobTask:2] 2018-07-01 05:49:33,105 SyncTask.java:73 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 have 32 range(s) out of sync for obj_fq_name_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:33,105 StreamResultFuture.java:90 - [Stream #87703dd0-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair
    INFO [RepairJobTask:2] 2018-07-01 05:49:33,105 LocalSyncTask.java:71 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 32 ranges with /172.31.3.108
    INFO [RepairJobTask:2] 2018-07-01 05:49:33,106 StreamResultFuture.java:90 - [Stream #8770da10-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair
    INFO [RepairJobTask:1] 2018-07-01 05:49:33,169 SyncTask.java:66 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for obj_uuid_table
    INFO [RepairJobTask:4] 2018-07-01 05:49:33,177 SyncTask.java:73 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 have 120 range(s) out of sync for obj_uuid_table
    INFO [RepairJobTask:4] 2018-07-01 05:49:33,177 LocalSyncTask.java:71 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 120 ranges with /172.31.3.108
    INFO [RepairJobTask:3] 2018-07-01 05:49:33,179 SyncTask.java:73 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 have 120 range(s) out of sync for obj_uuid_table
    INFO [RepairJobTask:3] 2018-07-01 05:49:33,179 LocalSyncTask.java:71 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 120 ranges with /172.31.15.136
    INFO [RepairJobTask:3] 2018-07-01 05:49:33,182 StreamResultFuture.java:90 - [Stream #877c24b0-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair
    INFO [RepairJobTask:4] 2018-07-01 05:49:33,184 StreamResultFuture.java:90 - [Stream #877bd690-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair
    INFO [RepairJobTask:4] 2018-07-01 05:49:33,348 SyncTask.java:66 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for obj_shared_table
    INFO [RepairJobTask:1] 2018-07-01 05:49:33,349 SyncTask.java:73 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 have 3 range(s) out of sync for obj_shared_table
    INFO
[RepairJobTask:1] 2018-07-01 05:49:33,349 LocalSyncTask.java:71 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 3 ranges with /172.31.3.108 INFO [RepairJobTask:1] 2018-07-01 05:49:33,350 StreamResultFuture.java:90 - [Stream #87961550-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair INFO [RepairJobTask:2] 2018-07-01 05:49:33,350 SyncTask.java:73 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 have 3 range(s) out of sync for obj_shared_table INFO [RepairJobTask:2] 2018-07-01 05:49:33,350 LocalSyncTask.java:71 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Performing streaming repair of 3 ranges with /172.31.15.136 INFO [RepairJobTask:2] 2018-07-01 05:49:33,351 StreamResultFuture.java:90 - [Stream #87963c60-7cf2-11e8-ad88-a1b725c94e34] Executing streaming plan for Repair INFO [RepairJobTask:2] 2018-07-01 05:49:33,457 RepairJob.java:143 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] obj_fq_name_table is fully synced INFO [RepairJobTask:2] 2018-07-01 05:49:33,488 RepairJob.java:143 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] obj_uuid_table is fully synced INFO [RepairJobTask:2] 2018-07-01 05:49:33,526 RepairJob.java:143 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] obj_shared_table is fully synced INFO [RepairJobTask:2] 2018-07-01 05:49:33,528 RepairSession.java:270 - [repair #866bc620-7cf2-11e8-ad88-a1b725c94e34] Session completed successfully INFO [RepairJobTask:1] 2018-07-01 05:49:34,098 SyncTask.java:66 - [repair #87cf74d0-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for events INFO [RepairJobTask:2] 2018-07-01 05:49:34,098 RepairJob.java:143 - [repair #87cf74d0-7cf2-11e8-ad88-a1b725c94e34] events is fully synced INFO [RepairJobTask:2] 2018-07-01 05:49:34,110 SyncTask.java:66 - [repair #87cf74d0-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for sessions INFO [RepairJobTask:3] 
2018-07-01 05:49:34,111 RepairJob.java:143 - [repair #87cf74d0-7cf2-11e8-ad88-a1b725c94e34] sessions is fully synced INFO [RepairJobTask:3] 2018-07-01 05:49:34,113 RepairSession.java:270 - [repair #87cf74d0-7cf2-11e8-ad88-a1b725c94e34] Session completed successfully INFO [RepairJobTask:3] 2018-07-01 05:49:34,419 SyncTask.java:66 - [repair #8802e0e0-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for sessions INFO [RepairJobTask:2] 2018-07-01 05:49:34,420 RepairJob.java:143 - [repair #8802e0e0-7cf2-11e8-ad88-a1b725c94e34] sessions is fully synced INFO [RepairJobTask:2] 2018-07-01 05:49:34,424 SyncTask.java:66 - [repair #8802e0e0-7cf2-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for events INFO [RepairJobTask:3] 2018-07-01 05:49:34,424 RepairJob.java:143 - [repair #8802e0e0-7cf2-11e8-ad88-a1b725c94e34] events is fully synced INFO [RepairJobTask:3] 2018-07-01 05:49:34,425 RepairSession.java:270 - [repair #8802e0e0-7cf2-11e8-ad88-a1b725c94e34] Session completed successfully INFO [RepairJobTask:5] 2018-07-01 05:54:42,625 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for service_chain_ip_address_table INFO [RepairJobTask:3] 2018-07-01 05:54:42,625 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for service_chain_ip_address_table INFO [RepairJobTask:2] 2018-07-01 05:54:42,626 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for service_chain_ip_address_table INFO [RepairJobTask:3] 2018-07-01 05:54:42,632 RepairJob.java:143 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] service_chain_ip_address_table is fully synced INFO [RepairJobTask:2] 2018-07-01 05:54:42,748 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are 
consistent for service_chain_table INFO [RepairJobTask:5] 2018-07-01 05:54:42,749 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for service_chain_table INFO [RepairJobTask:4] 2018-07-01 05:54:42,749 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for service_chain_table INFO [RepairJobTask:5] 2018-07-01 05:54:42,750 RepairJob.java:143 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] service_chain_table is fully synced INFO [RepairJobTask:4] 2018-07-01 05:54:42,796 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for service_chain_uuid_table INFO [RepairJobTask:2] 2018-07-01 05:54:42,797 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for service_chain_uuid_table INFO [RepairJobTask:3] 2018-07-01 05:54:42,798 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for service_chain_uuid_table INFO [RepairJobTask:2] 2018-07-01 05:54:42,798 RepairJob.java:143 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] service_chain_uuid_table is fully synced INFO [RepairJobTask:3] 2018-07-01 05:54:42,833 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for route_target_table INFO [RepairJobTask:4] 2018-07-01 05:54:42,833 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for route_target_table INFO [RepairJobTask:5] 2018-07-01 05:54:42,834 SyncTask.java:66 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for route_target_table INFO [RepairJobTask:4] 2018-07-01 05:54:42,835 RepairJob.java:143 - [repair 
#3edda390-7cf3-11e8-ad88-a1b725c94e34] route_target_table is fully synced INFO [RepairJobTask:4] 2018-07-01 05:54:42,837 RepairSession.java:270 - [repair #3edda390-7cf3-11e8-ad88-a1b725c94e34] Session completed successfully INFO [RepairJobTask:1] 2018-07-01 05:54:44,466 SyncTask.java:66 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for dm_pr_vn_ip_table INFO [RepairJobTask:2] 2018-07-01 05:54:44,467 SyncTask.java:66 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for dm_pr_vn_ip_table INFO [RepairJobTask:5] 2018-07-01 05:54:44,471 SyncTask.java:66 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for dm_pr_vn_ip_table INFO [RepairJobTask:4] 2018-07-01 05:54:44,472 RepairJob.java:143 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] dm_pr_vn_ip_table is fully synced INFO [RepairJobTask:2] 2018-07-01 05:54:44,477 SyncTask.java:66 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for dm_pnf_resource_table INFO [RepairJobTask:5] 2018-07-01 05:54:44,478 SyncTask.java:66 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for dm_pnf_resource_table INFO [RepairJobTask:7] 2018-07-01 05:54:44,478 SyncTask.java:66 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for dm_pnf_resource_table INFO [RepairJobTask:5] 2018-07-01 05:54:44,480 RepairJob.java:143 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] dm_pnf_resource_table is fully synced INFO [RepairJobTask:6] 2018-07-01 05:54:44,495 SyncTask.java:66 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for dm_pr_ae_id_table INFO [RepairJobTask:5] 2018-07-01 05:54:44,496 SyncTask.java:66 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] 
Endpoints /172.31.3.108 and /172.31.3.118 are consistent for dm_pr_ae_id_table INFO [RepairJobTask:7] 2018-07-01 05:54:44,497 SyncTask.java:66 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for dm_pr_ae_id_table INFO [RepairJobTask:5] 2018-07-01 05:54:44,499 RepairJob.java:143 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] dm_pr_ae_id_table is fully synced INFO [RepairJobTask:5] 2018-07-01 05:54:44,505 RepairSession.java:270 - [repair #4030b070-7cf3-11e8-ad88-a1b725c94e34] Session completed successfully INFO [RepairJobTask:1] 2018-07-01 05:54:45,183 SyncTask.java:66 - [repair #4134b2f0-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for useragent_keyval_table INFO [RepairJobTask:1] 2018-07-01 05:54:45,186 SyncTask.java:66 - [repair #4134b2f0-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for useragent_keyval_table INFO [RepairJobTask:3] 2018-07-01 05:54:45,189 SyncTask.java:66 - [repair #4134b2f0-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for useragent_keyval_table INFO [RepairJobTask:1] 2018-07-01 05:54:45,190 RepairJob.java:143 - [repair #4134b2f0-7cf3-11e8-ad88-a1b725c94e34] useragent_keyval_table is fully synced INFO [RepairJobTask:1] 2018-07-01 05:54:45,191 RepairSession.java:270 - [repair #4134b2f0-7cf3-11e8-ad88-a1b725c94e34] Session completed successfully INFO [RepairJobTask:1] 2018-07-01 05:54:47,073 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for healthmonitor_table INFO [RepairJobTask:4] 2018-07-01 05:54:47,073 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for healthmonitor_table INFO [RepairJobTask:2] 2018-07-01 05:54:47,074 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and 
/172.31.3.118 are consistent for healthmonitor_table INFO [RepairJobTask:4] 2018-07-01 05:54:47,077 RepairJob.java:143 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] healthmonitor_table is fully synced INFO [RepairJobTask:1] 2018-07-01 05:54:47,114 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for service_instance_table INFO [RepairJobTask:5] 2018-07-01 05:54:47,115 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for service_instance_table INFO [RepairJobTask:2] 2018-07-01 05:54:47,115 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for service_instance_table INFO [RepairJobTask:3] 2018-07-01 05:54:47,116 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for pool_table INFO [RepairJobTask:5] 2018-07-01 05:54:47,119 RepairJob.java:143 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] service_instance_table is fully synced INFO [RepairJobTask:5] 2018-07-01 05:54:47,120 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for pool_table INFO [RepairJobTask:6] 2018-07-01 05:54:47,121 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for pool_table INFO [RepairJobTask:5] 2018-07-01 05:54:47,121 RepairJob.java:143 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] pool_table is fully synced INFO [RepairJobTask:6] 2018-07-01 05:54:47,182 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for loadbalancer_table INFO [RepairJobTask:4] 2018-07-01 05:54:47,183 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 
are consistent for loadbalancer_table INFO [RepairJobTask:3] 2018-07-01 05:54:47,183 SyncTask.java:66 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for loadbalancer_table INFO [RepairJobTask:4] 2018-07-01 05:54:47,184 RepairJob.java:143 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] loadbalancer_table is fully synced INFO [RepairJobTask:4] 2018-07-01 05:54:47,185 RepairSession.java:270 - [repair #41948630-7cf3-11e8-ad88-a1b725c94e34] Session completed successfully INFO [RepairJobTask:4] 2018-07-01 05:54:48,708 SyncTask.java:66 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for obj_shared_table INFO [RepairJobTask:1] 2018-07-01 05:54:48,709 SyncTask.java:66 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for obj_shared_table INFO [RepairJobTask:2] 2018-07-01 05:54:48,711 SyncTask.java:66 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for obj_shared_table INFO [RepairJobTask:1] 2018-07-01 05:54:48,712 RepairJob.java:143 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] obj_shared_table is fully synced INFO [RepairJobTask:2] 2018-07-01 05:54:48,748 SyncTask.java:66 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for obj_fq_name_table INFO [RepairJobTask:4] 2018-07-01 05:54:48,749 SyncTask.java:66 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for obj_fq_name_table INFO [RepairJobTask:3] 2018-07-01 05:54:48,750 SyncTask.java:66 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for obj_fq_name_table INFO [RepairJobTask:4] 2018-07-01 05:54:48,751 RepairJob.java:143 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] obj_fq_name_table is fully synced INFO 
[RepairJobTask:3] 2018-07-01 05:54:48,880 SyncTask.java:66 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.15.136 are consistent for obj_uuid_table INFO [RepairJobTask:2] 2018-07-01 05:54:48,880 SyncTask.java:66 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for obj_uuid_table INFO [RepairJobTask:1] 2018-07-01 05:54:48,881 SyncTask.java:66 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for obj_uuid_table INFO [RepairJobTask:2] 2018-07-01 05:54:48,881 RepairJob.java:143 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] obj_uuid_table is fully synced INFO [RepairJobTask:2] 2018-07-01 05:54:48,883 RepairSession.java:270 - [repair #42d06190-7cf3-11e8-ad88-a1b725c94e34] Session completed successfully INFO [RepairJobTask:1] 2018-07-01 05:54:49,330 SyncTask.java:66 - [repair #43c2b0d0-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for sessions INFO [RepairJobTask:3] 2018-07-01 05:54:49,330 RepairJob.java:143 - [repair #43c2b0d0-7cf3-11e8-ad88-a1b725c94e34] sessions is fully synced INFO [RepairJobTask:1] 2018-07-01 05:54:49,335 SyncTask.java:66 - [repair #43c2b0d0-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.3.108 and /172.31.3.118 are consistent for events INFO [RepairJobTask:3] 2018-07-01 05:54:49,335 RepairJob.java:143 - [repair #43c2b0d0-7cf3-11e8-ad88-a1b725c94e34] events is fully synced INFO [RepairJobTask:3] 2018-07-01 05:54:49,336 RepairSession.java:270 - [repair #43c2b0d0-7cf3-11e8-ad88-a1b725c94e34] Session completed successfully INFO [RepairJobTask:1] 2018-07-01 05:54:49,639 SyncTask.java:66 - [repair #43e61752-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for events INFO [RepairJobTask:2] 2018-07-01 05:54:49,639 RepairJob.java:143 - [repair #43e61752-7cf3-11e8-ad88-a1b725c94e34] events is fully synced INFO [RepairJobTask:2] 
2018-07-01 05:54:49,640 SyncTask.java:66 - [repair #43e61752-7cf3-11e8-ad88-a1b725c94e34] Endpoints /172.31.15.136 and /172.31.3.118 are consistent for sessions INFO [RepairJobTask:3] 2018-07-01 05:54:49,641 RepairJob.java:143 - [repair #43e61752-7cf3-11e8-ad88-a1b725c94e34] sessions is fully synced INFO [RepairJobTask:3] 2018-07-01 05:54:49,641 RepairSession.java:270 - [repair #43e61752-7cf3-11e8-ad88-a1b725c94e34] Session completed successfully root@ip-172-31-3-118:/#

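As long as nodetool repair is run periodically, it seems fair to say that the config data is kept as replicas on multiple nodes (barring cases such as data corruption on all three nodes at the same time).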
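As a rough illustration of running the repair periodically, the sketch below builds the `nodetool repair` command line and either prints it (dry run) or executes it. The function names `build_repair_command` and `run_repair` are hypothetical helpers made up for this note, and the assumption is that the script runs somewhere `nodetool` is on the PATH (for example inside the Cassandra container). The `-pr` option limits the repair to the node's primary ranges; it is off by default here, matching the plain `nodetool repair` used above.

```python
import shlex
import subprocess


def build_repair_command(keyspace=None, primary_range_only=False):
    """Return the argv list for a nodetool repair run (hypothetical helper)."""
    cmd = ["nodetool", "repair"]
    if primary_range_only:
        # -pr repairs only the primary ranges of this node, so it has to be
        # run on every node in turn to cover the whole ring.
        cmd.append("-pr")
    if keyspace:
        # Without a keyspace argument, nodetool repairs all keyspaces.
        cmd.append(keyspace)
    return cmd


def run_repair(keyspace=None, primary_range_only=False, dry_run=True):
    """Print the command when dry_run is set, otherwise execute it."""
    cmd = build_repair_command(keyspace, primary_range_only)
    if dry_run:
        print(" ".join(shlex.quote(part) for part in cmd))
        return 0
    # Returns the exit status of nodetool.
    return subprocess.call(cmd)
```

Calling `run_repair(dry_run=False)` from cron or a systemd timer on each node, at staggered times, would be one way to schedule it. The interval is left open here because the original note does not state one.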

transparent service chain

In addition to the L3 service chain described in an earlier post (http://aaabbb-200904.hatenablog.jp/entry/2017/10/29/223844), Tungsten Fabric also supports service chains for L2 VNFs.

http://www.opencontrail.org/building-and-testing-layer2-service-images-for-opencontrail/

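In this configuration an L2 VNF (one that behaves like a linux bridge) sits between two vrouters. In practice, the MAC addresses of the source vrouter and the destination vrouter are rewritten to values reserved for the service chain, and traffic is passed through the VNF using those addresses.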

Note: the vrouter itself still behaves as a router in this case, so the left and right subnets may be different.

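The configuration steps are the same as in the linked blog post: apart from having to select 'Mode': 'Transparent' in the Service Template, the procedure is identical to that of an L3 service chain.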
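For reference, the same choice can be written out as a request body for the config API instead of being picked in the Web UI. The sketch below only builds the JSON document and does not send it. The helper name `build_service_template`, the template name, and the chosen `service_type` are assumptions made for illustration, and the field names follow my understanding of the `service_template_properties` schema, so they should be checked against the running version before use.

```python
import json

# Service modes as I understand the schema; treat this set as an assumption.
KNOWN_MODES = {"transparent", "in-network", "in-network-nat"}


def build_service_template(name, mode="transparent", domain="default-domain"):
    """Build a service-template body with the given service mode (sketch)."""
    if mode not in KNOWN_MODES:
        raise ValueError("unknown service mode: %s" % mode)
    return {
        "service-template": {
            "fq_name": [domain, name],
            "parent_type": "domain",
            "service_template_properties": {
                "version": 2,
                # 'transparent' corresponds to 'Mode': 'Transparent' in the UI.
                "service_mode": mode,
                "service_type": "firewall",
                "service_virtualization_type": "virtual-machine",
                "interface_type": [
                    {"service_interface_type": "left"},
                    {"service_interface_type": "right"},
                ],
            },
        }
    }


if __name__ == "__main__":
    # "l2-vnf-template" is a made-up name used only for this example.
    print(json.dumps(build_service_template("l2-vnf-template"), indent=2))
```

Only `service_mode` differs from what an L3 (in-network) template would carry, which matches the point that the remaining steps are unchanged.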

Note: when using VyOS as the VNF, I confirmed that the L2 service chain works with the following config.

set interfaces bridge br0
set interfaces ethernet eth1 bridge-group bridge br0
set interfaces ethernet eth2 bridge-group bridge br0